What to keep in mind when testing web solutions?

Testing web solutions, such as information systems, online stores, and portals, is much more complex and exciting than clicking through an application, which is often equated with testing. Perhaps this is where the misconceptions that anyone can conduct tests and that testing alone is not necessary come from.

In this article, we will not be describing the different types of testing; instead, we will focus on the methods chosen and provide specific examples of what the author, Trinidad Wiseman’s tester Tanel Tromp, believes has become increasingly important and what requires special attention when testing web solutions. In the following post, we will discuss usability testing, accessibility testing, and the automated testing of graphic user interfaces.

Usability testing

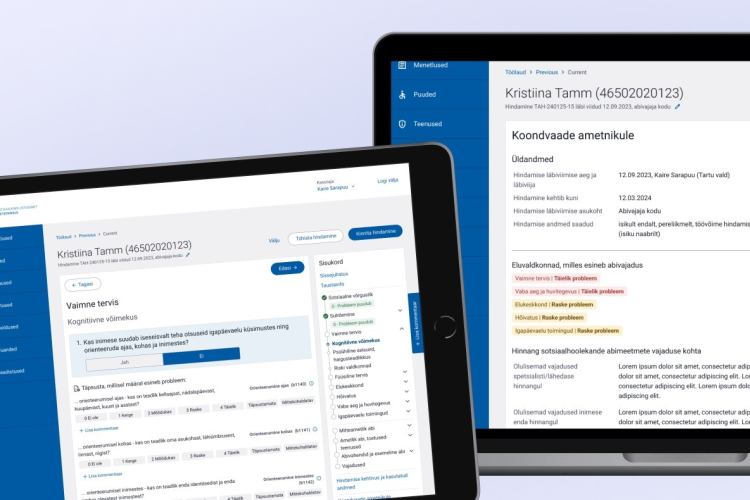

Usability testing is a subtype of non-functional testing that significantly impacts the user of the application since it includes testing the part that the user actually sees and where the actual interaction between the system and the user takes place.

This subarea may seem deceptively easy to test at first glance because it is quite diffuse. In reality, it requires the tester to have excellent theoretical knowledge of UX, i.e. the field of user experience, as well as the ability to see the whole picture, empathy, i.e. the ability to step into the end user's shoes, and the ability to assess things independently of one another.

So, the tester will definitely benefit from a background in UX, experience and training in the field, and exposure to the latest trends. A tester who lacks this theoretical basis and does not know what good usability looks like will not be able to recognise a bad user experience, because he has no higher standard to measure the application against and therefore sees nothing to object to.

User experience, which is what usability testing evaluates, can be defined through various indicators, all of which correlate strongly with each other. In addition to practical experience, it is important for the tester to establish a reasonably clear working definition so that he can then build a systematic testing method on it.

Here is an example of a three-component definition:

- What does the web application look like, i.e. what is its design?

- What does using the web application feel like? In other words, how much subjective satisfaction does using the application offer?

- What is its usability like, i.e. how easy is it to perform the activities for which the web application is intended? This also includes various metrics such as navigation, learning curve, cognitive uptake etc.

How to test the design AKA what the web application looks like?

Testing requires design views which can be compared against the development. Otherwise, we cannot call this testing at all as it would instead be one person’s – the tester’s – subjective opinion on something.

It is not the responsibility of the tester to evaluate the design as such, and the tester should never take part in bringing up this topic. A sort of cliché humour example of this would be the question, "do you like blondes or brunettes?". After all, as a rule, the design is created by experienced UX/UI designers, and the tester's task is simply to ensure that the developed fonts, colours, styles, mobile, desktop, and tablet views match the design exactly.

In a properly conducted process, a solution is never developed or tested based on a static image or screenshot (e.g. png, jpg) or a Photoshop PSD file; instead, the styles (font-size, font-family, hex colour codes etc.) are easily accessible, visible and comparable via proper software (e.g. Figma).

Testing "How does it feel?" and usability

For "How does it feel?" and "usability" testing, it is possible to use a specific predetermined list as a basis. There will, of course, always be small differences between, for example, information systems, admin vs public views, and online stores. This approach could be compared to an aircraft's landing and take-off procedures in a way – once the list has been checked, a large number of problems and errors have already been ruled out.

Here is an example of a checklist that is worth going through in this category. Please note that this list may differ slightly for each tester:

- the product owner must have the broadest possible access to change the content of the application, such as text, titles, hint bubble content, element names, and button names, and this content should not be hardcoded. The project budget, of course, sets limits here;

- the user interface buttons must be large enough and easy to hit with the mouse cursor;

- the entire application must be fully usable with a mouse alone or with a keyboard alone;

- each page must have its own page title;

- a page whose content is longer than can fit inside one view must have a scrollbar in all browsers;

- the page must be equally usable on different devices and resolutions. For example, the mobile view should not lose the ease of use available on the desktop view. Additionally, the principle of ensuring that the desktop and mobile views do not differ significantly from each other, for example, in terms of information structure, should be observed. This helps the user maintain their rapid cognitive learning speed when switching devices;

- there must be enough "air" between different topics, the texts must be aligned so that the content is easy to read, and related information groups must also be visually grouped together;

- the page must not contain typographical or spelling errors;

- the page must not contain broken links or images. You can use various web crawlers, which are already built into better testing software, or you can use certain websites, such as Atomseo;

- the page must not use inactive buttons that cannot be pressed. Instead, error messages should be used to explain how to proceed in the application;

- it should be possible to go to the home page from all subpages via a link, preferably by clicking on the company’s logo;

- navigation must be well thought out and tested with end users, i.e. the user should be able to quickly understand where they are on the page, where they can go and how they can go back;

- the main menu must be accessible on each page;

- if possible, tooltips should be used to explain more complex topics to the user;

- in addition to navigating through the menu trees, the user must also be able to search for the content they are looking for through the search field. These user types are called navigators and searchers, respectively;

- the application must be easy to learn, i.e. every time you use it, it gets easier and faster to perform the necessary activities.

Undoubtedly, the cherry on top of the cake for a good usability test is if the application is also tested on different end-users. In TWN, this is usually done by the interaction designers, not the testers, and users are recruited to conduct specific tasks during this process.

It is important to add that end-user usability testing and usability testing performed by a tester are not completely interchangeable, but complementary to one another.

Several articles on this topic have already been published on our blog:

- Do not overtake anyone in a blind curve aka testing a prototype

- 10 mistakes to avoid when conducting website usability testing

- How do usability statistics supplement usability testing?

- What to consider when planning a usability test that includes people with special needs

Accessibility Testing (WCAG)

Accessibility is becoming an increasingly high priority in European web development. Many websites, such as those of public sector bodies, must meet an agreed standard of accessibility within a set timeframe.

Essentially, accessibility is also a question of usability, but on a more specific level where people with special needs and elderly people are explicitly included among the end users. From a testing point of view, the scope is much clearer than in general usability testing, as testing can be based on specific requirements and directives (WCAG 2.1 at the time of writing).

However, one should keep in mind that the level to be tested for is fixed in the requirements – as a rule, this means the AA-level which also covers the A-level criteria. You can read more about accessibility here: How everybody benefits from accessibility.

WCAG groups its success criteria under four main principles: perceivable, operable, understandable, and robust. There are many tools and techniques for testing against them, and a lot needs to be tested, which makes WCAG testing very time-consuming.

There are also tools available that try to simplify this task, such as AChecker and JAWS Inspect, but these alone are certainly not enough, and many different tools and methods must be employed to ensure compliance with the criteria.

There is a specific checklist that must be successfully completed in order to achieve the level that meets the requirements of WCAG 2.1. The following subtopics form a list of the main activities from the accessibility checklist and the methods for conducting them.

Going through a web application with screen readers and launching the corresponding software for the screen reader

While the average user mainly relies on their eyes, with a mouse and a keyboard as additional tools for navigating web applications, visually impaired people use screen readers that read aloud what is happening in the web application.

A professional tester’s toolkit includes at least the three most popular screen readers in Estonia: VoiceOver (Apple), NVDA (Windows) and JAWS (Windows). These are in turn tested in combinations according to the operating system, device and browser being used, such as JAWS + Chrome, VoiceOver + mobile device and others.

Why use three different screen readers and different combinations for testing? The answer is quite simple: screen readers and devices all behave differently when reading the code of a web application, and the application must be as equally accessible as possible in all combinations. For example, we cannot assume that all users use Apple desktops and VoiceOver and decide to test for accessibility in that combination alone.

Examples of some of the requirements for successfully browsing a page with a screen reader:

- the first element of each web page must be a "Skip to main content" link;

- each web page must have a descriptive and clear title (<title>);

- the language of the web page must be indicated by the corresponding ISO code (e.g. lang="en");

- photos must have alt attributes (alternative text) attached to them;

- associated web elements must be grouped in HTML;

- information provided via images must have textual alternatives.
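Some of the requirements above can be pre-checked statically before a manual pass with a real screen reader. The following is a minimal sketch in Python using only the standard library. The class name `AccessibilityAudit`, the function `audit` and the rule that a skip link is simply the first link with an in-page `#` target are assumptions made for illustration, not part of any standard tool, and the check does not replace listening to the page with VoiceOver, NVDA or JAWS.

```python
from html.parser import HTMLParser


class AccessibilityAudit(HTMLParser):
    """Collects a few static facts that screen readers depend on."""

    def __init__(self):
        super().__init__()
        self.lang = None              # value of <html lang="...">
        self.title = ""               # text inside <title>
        self.first_link_href = None   # href of the first <a> on the page
        self.images_missing_alt = []  # src of every <img> without an alt attribute
        self._in_title = False
        self._seen_link = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "html":
            self.lang = attrs.get("lang")
        elif tag == "title":
            self._in_title = True
        elif tag == "a" and not self._seen_link:
            self._seen_link = True
            self.first_link_href = attrs.get("href")
        elif tag == "img" and "alt" not in attrs:
            self.images_missing_alt.append(attrs.get("src", "<no src>"))

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def audit(html):
    """Return a list of human-readable problems found in the HTML string."""
    parser = AccessibilityAudit()
    parser.feed(html)
    problems = []
    if not parser.lang:
        problems.append("missing lang attribute on <html>")
    if not parser.title.strip():
        problems.append("missing or empty <title>")
    # Heuristic (assumption): a skip link is the first link and targets an in-page anchor.
    if not (parser.first_link_href or "").startswith("#"):
        problems.append("first link is not an in-page skip link")
    for src in parser.images_missing_alt:
        problems.append(f"image without alt attribute: {src}")
    return problems
```

Calling `audit(page_source)` on the HTML of a page returns an empty list when none of these four checks fails. An empty `alt=""` is deliberately accepted, since it is the usual way to mark decorative images.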

Going through a web application with a keyboard

This partly overlaps with the previous point, but with the key difference that the application is mainly navigated through with the "Tab" key along with the "Enter" key, the "Space" key, and the "Escape" key.

The "Tab" key mainly moves between interactive elements, and the "Enter" key and the "Space" key activate the element in focus. Keyboard testing also checks whether the focus order matches the logical hierarchy of the content, i.e. where the focus moves from and where it moves to.

Additionally, this test checks whether the element in focus is clearly distinguishable from other content by means of a visible focus indicator.

Examples of keyboard testing requirements:

- it must be possible to move onto all interactive elements, such as buttons, links, and input fields, with the "Tab" key;

- it must be possible to close any dialogues and modals with the "Escape" key;

- buttons must be activated by both the “Enter” key and the “Space” key;

- links must open with the “Enter” key;

- while a modal is open, the "Tab" key must not move the focus out of it to the content behind it, i.e. the focus must stay trapped inside the modal.
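The focus order mentioned above can also be estimated from the markup before pressing a single key. The sketch below is a simplified model in Python, written under stated assumptions: only links with an `href`, buttons, inputs, selects and textareas are treated as focusable by default, elements with a positive `tabindex` come first in ascending order, and elements with a negative `tabindex` are skipped. The names `TabOrderParser` and `tab_order` are hypothetical, and real browsers consider more cases (visibility, `contenteditable`, shadow DOM and so on).

```python
from html.parser import HTMLParser

# Simplifying assumption: only these tags (plus <a href>) are focusable by default.
NATIVELY_FOCUSABLE = {"button", "input", "select", "textarea"}


class TabOrderParser(HTMLParser):
    """Collects elements that the Tab key can reach, in document order."""

    def __init__(self):
        super().__init__()
        self.candidates = []  # tuples of (tabindex, document position, label)

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if "disabled" in attrs or attrs.get("type") == "hidden":
            return
        native = tag in NATIVELY_FOCUSABLE or (tag == "a" and "href" in attrs)
        tabindex = None
        raw = attrs.get("tabindex")
        if raw is not None:
            try:
                tabindex = int(raw)
            except ValueError:
                pass  # an invalid value is treated as if the attribute were absent
        if tabindex is None:
            if not native:
                return
            tabindex = 0
        if tabindex < 0:
            return  # reachable by script only, skipped by the Tab key
        label = attrs.get("id") or tag
        self.candidates.append((tabindex, len(self.candidates), label))


def tab_order(html):
    """Return element labels in the order the Tab key is expected to visit them."""
    parser = TabOrderParser()
    parser.feed(html)
    # Positive tabindex values come first, sorted by value and then document order.
    positive = sorted(c for c in parser.candidates if c[0] > 0)
    natural = [c for c in parser.candidates if c[0] == 0]
    return [label for _, _, label in positive + natural]
```

If the list returned by `tab_order(page_source)` differs from the visual reading order of the page, that is usually a sign that a positive `tabindex` has been used somewhere and that the focus order deserves a closer manual look.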

Checking the contrast ratio of colours

An important part of accessibility testing, from the perspective of colour blind and older users, is the right combination of different colours in the design.

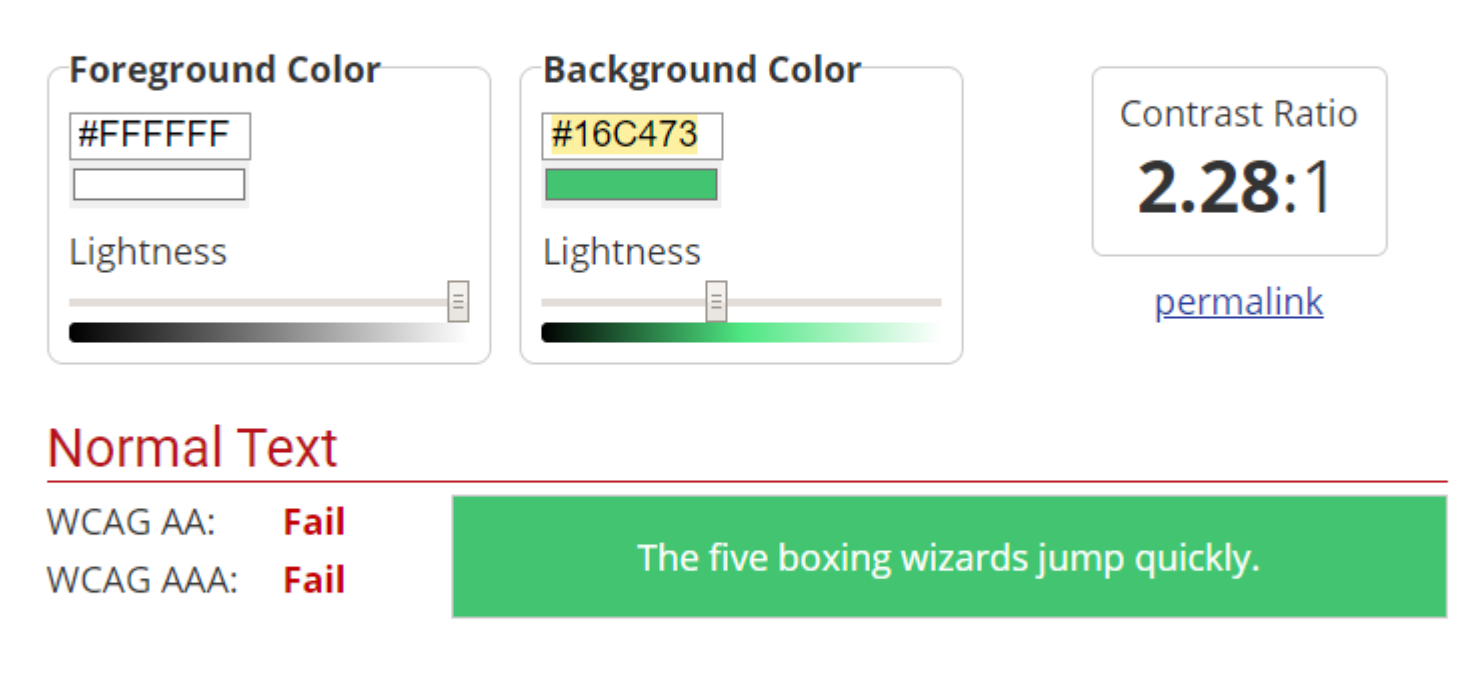

Another thing to check is whether the minimum contrast ratios that make text clearly visible have been met. At the AA level, these are 4.5:1 for normal-sized text and 3:1 for large text.

Additionally, adequately contrasting colours become very important when working with outdated equipment that has poor colour reproduction. The WebAIM Color Contrast Checker is an excellent tool for checking colour contrast, and when combined with, for example, ColorZilla, it enables you to perform this check quickly.

Screenshot 1: An example of how the WebAIM tool validates the different colours of normal-sized text according to the AA and AAA levels
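The contrast ratio that such tools report can also be calculated directly from two hex colour codes, which is handy when many colour pairs from a design file need checking. The sketch below follows the relative luminance and contrast ratio formulas defined in WCAG 2.x. The function names and the `large_text` parameter are chosen here for illustration only, and the code assumes six-digit hex codes such as `#767676`.

```python
def relative_luminance(hex_colour):
    """Relative luminance of an sRGB colour given as a six-digit hex code."""
    hex_colour = hex_colour.lstrip("#")
    channels = [int(hex_colour[i:i + 2], 16) / 255 for i in (0, 2, 4)]
    # Convert each gamma-encoded sRGB channel to its linear value (WCAG 2.x formula).
    linear = [
        c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4
        for c in channels
    ]
    red, green, blue = linear
    return 0.2126 * red + 0.7152 * green + 0.0722 * blue


def contrast_ratio(foreground, background):
    """Contrast ratio between two colours, from 1.0 (identical) up to 21.0."""
    first = relative_luminance(foreground)
    second = relative_luminance(background)
    lighter, darker = max(first, second), min(first, second)
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa(foreground, background, large_text=False):
    """Check the AA minimum: 4.5:1 for normal text, 3:1 for large text."""
    minimum = 3.0 if large_text else 4.5
    return contrast_ratio(foreground, background) >= minimum
```

For example, `passes_aa("#767676", "#ffffff")` is true, while the only slightly lighter grey `#777777` on white falls just below 4.5:1 and passes only as large text.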

Automated testing of web solutions

The popularity of automated testing has been growing steadily worldwide, especially within agile development, mainly due to the growing share of microservices and apps. Some people have also become increasingly vocal in arguing that most testing could already be covered by automated tests at the unit/component, API, and GUI (graphical user interface) levels.

However, it is unlikely that automated tests will be able to cover non-functional testing types, such as UX and accessibility testing, in the near future. For regression testing, on the other hand, sets of automated tests are a great help to the tester in his daily work.

In my experience, a tester’s work would not be very satisfying without them since in the case of large projects, it would not be sensible to run a large number of tests manually – that would take a considerable amount of time and effort.

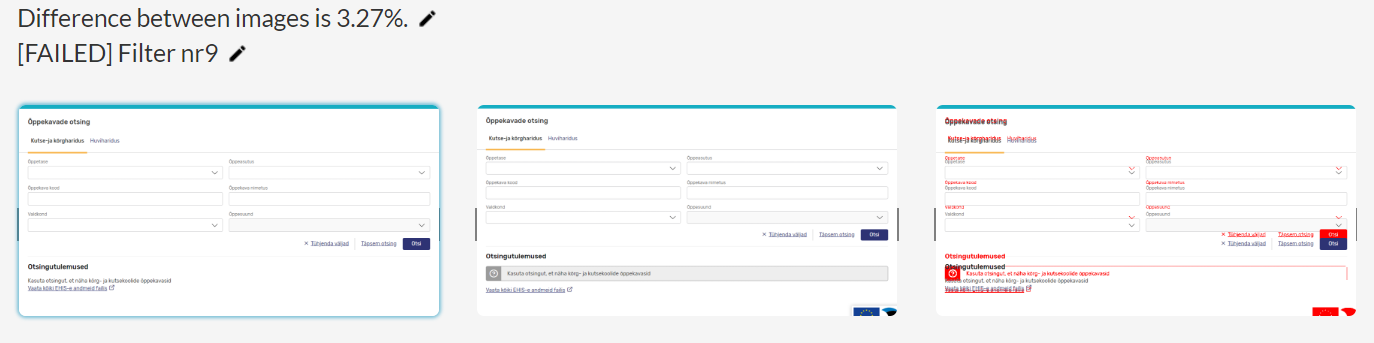

From the end user's point of view, the appearance of a web application also plays an important role, not just whether a service runs in the background. Therefore, in addition to API tests, it is recommended to create automated GUI tests that compare certain elements against an earlier state at specific time intervals, using a similarity percentage determined by the tester. In this way, the test detects any broken (changed) elements in the view.
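The idea of a similarity percentage can be illustrated with a very small comparison routine. This is a sketch only: it assumes that both screenshots have already been decoded into rows of RGB tuples, and the names `similarity_percent`, `view_unchanged`, `tolerance` and `threshold` are hypothetical. Commercial and open-source GUI testing tools use their own, more sophisticated comparison algorithms.

```python
def similarity_percent(baseline, current, tolerance=0):
    """Percentage of pixels whose colour channels differ by at most `tolerance`.

    Both arguments are lists of rows, each row a list of (r, g, b) tuples.
    """
    same_shape = len(baseline) == len(current) and all(
        len(row_a) == len(row_b) for row_a, row_b in zip(baseline, current)
    )
    if not same_shape:
        raise ValueError("screenshots must have the same dimensions")
    total = 0
    matching = 0
    for row_a, row_b in zip(baseline, current):
        for pixel_a, pixel_b in zip(row_a, row_b):
            total += 1
            if all(abs(a - b) <= tolerance for a, b in zip(pixel_a, pixel_b)):
                matching += 1
    return 100.0 * matching / total if total else 100.0


def view_unchanged(baseline, current, threshold=99.0):
    """True if the current screenshot is at least `threshold` percent similar."""
    return similarity_percent(baseline, current) >= threshold
```

A threshold below 100 leaves room for harmless differences such as anti-aliasing, while a change that breaks a whole element typically pushes the similarity well below it.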

The tester can also easily choose which device, screen width and browser he or she will run the test for – this way, the tester can check that both the mobile and desktop versions are working correctly on the desired combination of different browsers.

Today's automated GUI testing software is very powerful and allows you to create extensive and comprehensive tests if you are committed and willing.

An example of a graphical interface comparison result within an automated testing software

Essentially, GUI testing software such as Selenium, EndTest or Katalon Studio can conduct most of the required tests; it just depends on whether there is a point to using it and how much time it would take.

For example, it makes more sense to cover the operation of microservices, CSV file uploads, and large forms that can be filled out in many variations with automated API tests.

Here are a few random examples of what can be checked with automated tests:

- the condition and loading times of all links on the website;

- whether scroll memory is working, i.e. whether the user can see the location from where they went to the next view when moving back and forth;

- the styles of the content and the text, for example, whether the text retains a bold format somewhere;

- whether the navigation functions correctly;

- filling in and submitting forms;

- the operation of components in end-to-end mode, for example, whether the feedback provided by a user is sent from the user interface to the database in the correct format;

- whether the autocomplete function of the search engine functions correctly.
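The first item on this list, the condition and loading times of links, is simple enough to sketch without any dedicated testing software. The following Python example uses only the standard library. The names `LinkCollector`, `fetch_status` and `check_links`, as well as the two-second limit, are assumptions made for illustration. It only inspects the links and images found in one HTML document and does not crawl a whole site.

```python
import time
import urllib.error
import urllib.request
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Collects absolute URLs of links and images from one HTML document."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.urls = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "a":
            target = attrs.get("href")
        elif tag == "img":
            target = attrs.get("src")
        else:
            target = None
        # Skip in-page anchors and non-HTTP schemes.
        if target and not target.startswith(("#", "mailto:", "tel:", "javascript:")):
            self.urls.append(urljoin(self.base_url, target))


def fetch_status(url, timeout=10):
    """Return the HTTP status code for `url`, or None if the request fails."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        return error.code
    except OSError:
        return None


def check_links(html, base_url, fetch=fetch_status, max_seconds=2.0):
    """Return (url, status, seconds, ok) for every link and image in `html`."""
    collector = LinkCollector(base_url)
    collector.feed(html)
    report = []
    for url in collector.urls:
        started = time.perf_counter()
        status = fetch(url)
        elapsed = time.perf_counter() - started
        ok = status is not None and status < 400 and elapsed <= max_seconds
        report.append((url, status, round(elapsed, 3), ok))
    return report
```

The `fetch` parameter makes it possible to swap in a fake function while trying the check out, so that no real requests are sent; in regular use the default `fetch_status` performs the actual HTTP requests.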

When creating automated tests, it is worth thinking thoroughly about which tests need to be automated and to what extent, and whether it is necessary to automate them at all.

Based on my experience, I can say that once you have started creating automated tests, it becomes very tough to imagine a complete testing process without the use of corresponding automated testing software.

There is no need to be afraid that creating automated tests requires a thorough knowledge of different programming languages. It does not, and if necessary, anyone can write a small snippet of JavaScript code with the help of the Internet or a colleague!