Comparing results: automated vs manual accessibility testing

The accessibility requirements that will come into force from 28 June 2025 require service providers to assess the compliance of their digital environments with the European Union standard EN 301 549 V3.2.1. There are two options for evaluation: relatively resource-intensive manual testing, or free and therefore attractive automated testing tools. In this article, we'll explore the difference between automated and manual accessibility testing results.

Automated testing tools are useful for checking the accessibility of digital environments and provide a good starting point for identifying issues, but their capabilities are limited. High-quality software development plays an important role in accessibility, as our audits show that nearly 75% of accessibility issues stem from front-end code. Unfortunately, automated tests cannot detect all of these problems.

Automated tools are able to check whether some predetermined technical conditions are met (e.g., whether `<input>` is connected to `<label>`), but they can't see the big picture and can't understand the actual problems in the code (e.g., if the elements are grouped visually but not in the code).
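To illustrate what a "predetermined technical condition" looks like in practice, here is a minimal sketch of a label check written with Python's standard `html.parser`. It is an assumption-laden simplification, not how any of the tools in this article are implemented: the class and function names are hypothetical, and it only looks at `<label for="...">`, ignoring wrapping labels, `aria-label` and `aria-labelledby`.

```python
from html.parser import HTMLParser


class LabelCheck(HTMLParser):
    """Collects input ids and <label for="..."> targets from an HTML fragment."""

    def __init__(self):
        super().__init__()
        self.input_ids = []         # id (or None) of every non-hidden <input>
        self.label_targets = set()  # values of the "for" attribute on labels

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "input" and attributes.get("type") != "hidden":
            self.input_ids.append(attributes.get("id"))
        elif tag == "label" and "for" in attributes:
            self.label_targets.add(attributes["for"])


def unlabelled_inputs(html):
    """Return the ids of inputs that no <label for="..."> points to."""
    checker = LabelCheck()
    checker.feed(html)
    return [
        input_id
        for input_id in checker.input_ids
        if input_id is None or input_id not in checker.label_targets
    ]


# Hypothetical markup: the second field has no associated label.
sample = """
<div class="row">
  <label for="email">Email</label><input id="email" type="email">
  <input id="phone" type="tel">
</div>
"""
print(unlabelled_inputs(sample))  # ['phone']
```

A rule like this can say that a label is missing, but it has no way of knowing whether the two fields appear to sighted users as one visual group that should also be grouped in the code.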

What's more, automated tools can produce incorrect results: they may flag problems that don't actually exist (false positives) or, conversely, fail to identify real problems (false negatives).

Accessibility is not just a technical requirement, but first and foremost a human-centred approach. Manual testing is necessary to understand how a web page or application actually performs for different users. Manual testing helps to uncover more complex issues and validate findings from automated tools.

Read more about accessibility on our website. Our specialists are happy to help you - contact us and order an accessibility audit, consultation or training.

Testing

Our aim was to find out the differences between the results of automated and manual accessibility testing. For testing, we chose the front page of an international public institution. We tested it with automated tools as well as manually. For automated testing, we used free browser extensions: WAVE Evaluation Tool, AXE DevTools, ARC Toolkit, and Siteimprove Accessibility Checker.

Manual testing for the desktop version was done using the Chrome browser and the JAWS screen reader, and for the mobile version on an iOS device with the VoiceOver screen reader and an Android device with the TalkBack screen reader.

Results

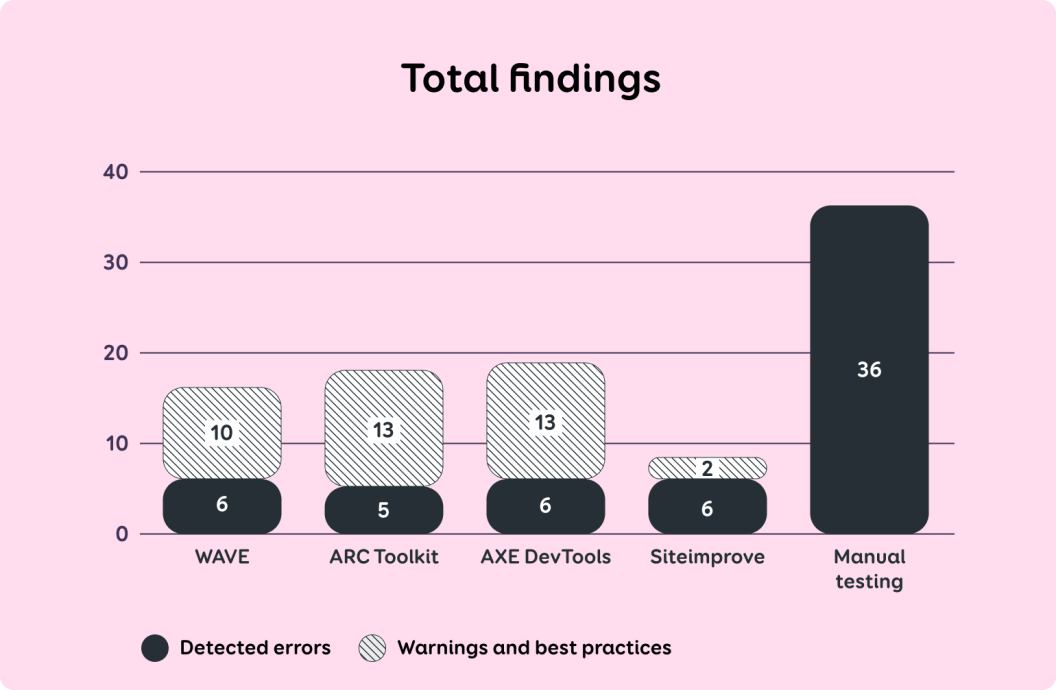

Figure 1. Total errors found

alt="Siteimprove found 6 errors and 2 warnings, ARC found 5 errors and 13 warnings, AXE found 6 errors and 13 warnings, WAVE found 6 errors and 10 warnings. Manual testing found 36 errors."

The summarised results in Figure 1 show that automated tools identified significantly fewer accessibility issues than manual testing.

However, the actual number of findings is even smaller, as most automated tools report the same error for each code element separately, which creates repetitions. In Table 1, where we display results by findings, we have grouped repetitive findings into one to make them more comparable to the results of manual testing.

For example, if an automated tool reported four separate errors, one for each incorrect list element, then Table 1 shows them as a single error: "one list is read out by the screen reader as four separate lists".
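The grouping step described above can be sketched in a few lines of Python. This is only an illustration: the input format, the field names (`rule`, `container`, `element`) and the rule identifiers are hypothetical and do not correspond to the export format of any specific tool.

```python
from collections import defaultdict

# Hypothetical raw tool output: one entry per flagged element.
raw_findings = [
    {"rule": "list-structure", "container": "ul.news", "element": "li:nth-child(1)"},
    {"rule": "list-structure", "container": "ul.news", "element": "li:nth-child(2)"},
    {"rule": "list-structure", "container": "ul.news", "element": "li:nth-child(3)"},
    {"rule": "list-structure", "container": "ul.news", "element": "li:nth-child(4)"},
    {"rule": "color-contrast", "container": "header", "element": "button.search"},
]


def group_findings(findings):
    """Collapse per-element reports into one finding per (rule, container)."""
    grouped = defaultdict(list)
    for finding in findings:
        key = (finding["rule"], finding["container"])
        grouped[key].append(finding["element"])
    return dict(grouped)


grouped = group_findings(raw_findings)
print(len(raw_findings), "raw reports ->", len(grouped), "grouped findings")
# 5 raw reports -> 2 grouped findings
```

Counting grouped findings rather than raw reports is what makes the tool totals comparable with manual testing, where one broken list is written up once.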

Automated tools also highlight parsing errors, but these were not taken into account in manual testing, because the "Parsing" criterion has been removed from the latest WCAG version, 2.2, and no longer needs to be tested.

The results of different automated tools are also not fully comparable with each other, as each tool reports findings in a different way. Most automated tools also highlighted "warnings and best practices."

In these cases, it may be unclear whether the finding is a violation of an actual accessibility criterion or simply an opportunity to improve the user experience. If manual testing reported something as an issue but an automated tool listed it under "warnings / best practices", we still marked it as "yes" in Table 1.

This shows that while automated tests bring up a variety of findings, validating them still requires accessibility knowledge and human review. The following table shows the results of manual testing and whether automated tests found them or not.

Table 1. Findings from manual testing and whether each automated tool detected them

| Criterion | Summary of finding | Manual testing | WAVE | ARC | AXE | Siteimprove |
|---|---|---|---|---|---|---|
| Contrast (Minimum) | The contrast ratio of the text and the background on the button is below the required limit of 4.5:1. | yes | yes | yes | yes | yes |
| Bypass Blocks | There is no "Jump to main content" link that would allow the user to go directly from the beginning of the page to the content of the page. | yes | - | yes | - | yes |
| Focus Visible | There is no visible focus style on the button. | yes | - | - | - | - |
| Focus Visible | The focus style of the button exists but is practically invisible. | yes | - | - | - | - |
| Focus Order | On mobile, the keyboard focus can move under the menu while the menu is open. | yes | - | - | - | - |
| Focus Order | The link is focused twice with the keyboard, which makes navigation slower. | yes | - | - | - | - |
| Headings and Labels | The date format is ambiguous and not clear. | yes | - | - | - | - |
| Info and Relationships | The text in the table is read out as a link by screen readers. | yes | - | - | - | - |
| Info and Relationships | The list is read out by a screen reader as four separate lists. | yes | - | yes | yes | - |
| Info and Relationships | An element is read out by screen readers as a list, but it is not actually a list. | yes | - | yes | yes | - |
| Info and Relationships | Not all navigation elements are inside the navigation for the screen reader. | yes | - | - | - | - |
| Info and Relationships | The page lacks regions that help screen readers understand and navigate the page. | yes | - | partially | yes | yes |
| Info and Relationships | The code lacks the first-level heading `<h1>`. The headings are not hierarchically marked up in the code, which creates confusion for the screen reader user. | yes | yes | yes | yes | yes |
| Keyboard | Users can't move onto the search results with the keyboard. | yes | - | - | - | - |
| Link Purpose | External links are distinguishable visually, but not with a screen reader. | yes | - | - | - | - |
| Label in Name | The visible text of the button and the text in the code do not match, which causes problems for both screen reader and voice command users. | yes | - | - | - | - |
| Meaningful Sequence | The focus of the screen reader jumps to an unexpected place. | yes | - | - | - | - |
| Meaningful Sequence | The same text is read out by a screen reader several times in a row. | yes | - | - | - | - |
| Name, Role, Value | The screen reader doesn't say whether an item is in an open or closed state. | yes | - | - | - | - |
| Name, Role, Value | The mobile menu covers the close button, and the menu cannot be closed with voice commands. | yes | - | - | - | - |
| Name, Role, Value | The selected menu item is distinguishable visually, but not with a screen reader. | yes | - | - | - | - |
| Name, Role, Value | Users can't select search results with a screen reader or voice commands. | yes | - | - | - | - |
| Name, Role, Value | Navigation is incorrectly marked up as a context menu bar. | yes | partially | partially | partially | - |
| Non-Text Content | When an icon button is focused, a sequence of numbers is read out with a screen reader, which does not describe the function of the button. | yes | - | - | - | - |
| Non-Text Content | When the logo is focused, the screen reader reads out text that is not actually on the logo. | yes | - | - | - | - |
| Non-Text Content | When focusing an icon, the screen reader reads out text that doesn't describe the meaning of the icon. | yes | - | - | - | - |
| On Focus | The search box closes unexpectedly. | yes | - | - | - | - |
| Pointer Cancellation | The close button in the search box is activated when the mouse is pressed (not clicked). | yes | - | - | - | - |
| Pointer Gestures | Certain actions can only be performed by dragging your finger along a certain trajectory. | yes | - | - | - | - |
| Reflow | In the mobile view, things move on top of each other, and a horizontal scroll bar appears. | yes | - | - | - | - |
| Status Messages | The screen reader doesn't notify the user when search results are displayed. | yes | - | - | - | - |
| Status Messages | When the search icon is clicked, a search box opens, but the screen reader doesn't notify the user that it happened. | yes | - | - | - | - |
| Text Resize | When using browser magnification, things shift on top of each other. | yes | - | - | - | - |
| Use of Color | Links are distinguished from the rest of the text only by a different color. | yes | - | - | yes | - |
| User preference | The text doesn't respond to the browser's font size settings. | yes | - | - | - | - |
| User preference | The page does not respond to the browser's dark mode settings. | yes | - | - | - | - |
| Total | | 36 | 3 | 7 | 7 | 4 |

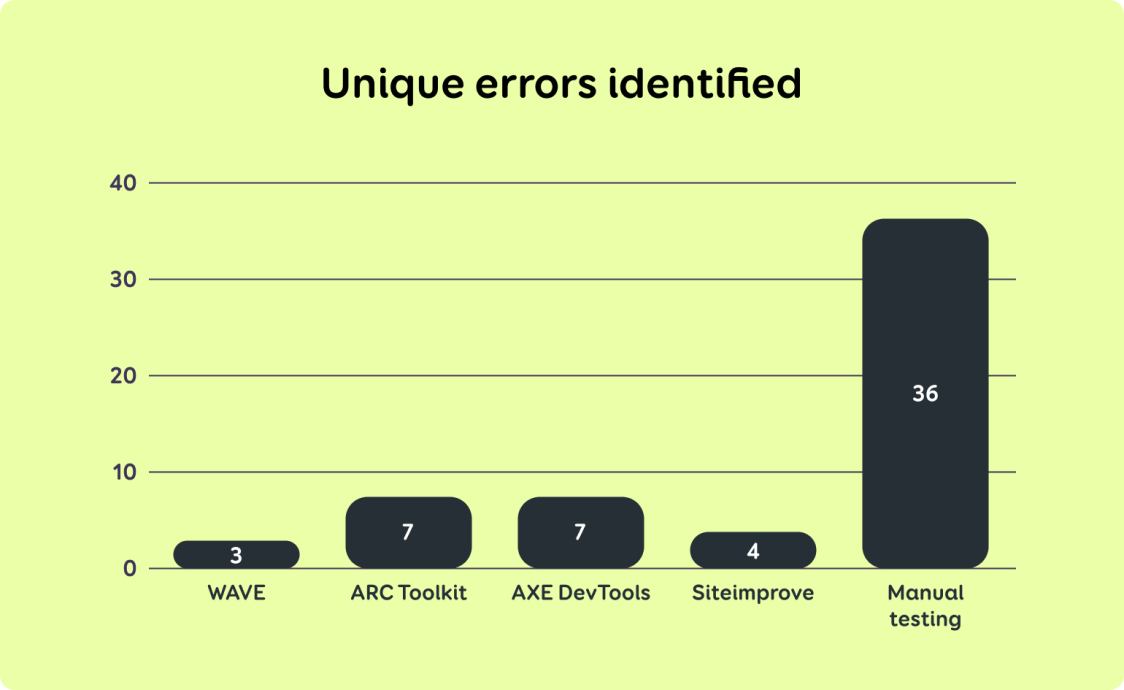

Figure 2: Unique errors.

bottom="Tools found respectively 3, 7, 7 and 4 unique errors. Manual testing found 36 errors."

Table 1 and Figure 2 clearly show that automated accessibility tests identified very few accessibility issues compared to manual testing.

In most cases, automated tools were unable to detect problems related to screen reader behavior: for example, situations where the screen reader read out text that did not describe the element, or where important messages were not read out automatically.

The automated tools also did not detect issues with focus visibility and order, which primarily affect people who navigate using a keyboard or switches. For example, the tools did not detect cases where the keyboard focus was invisible, moved illogically, or jumped to an unexpected location.

Three out of four automated tools also reported findings that were not reported during manual testing. We have highlighted these findings in Table 2 together with reasons why they were not reported in manual testing.

Table 2. Findings reported by automated tools but not by manual testing

| Tool | Criterion | Summary of finding | Our comment |
|---|---|---|---|
| WAVE Evaluation Tool | Contrast (Minimum) | The contrast ratio between the text and the text background should be at least 4.5:1, but is 1:1. | Visually, the text is white and the background is dark blue, which actually has a contrast ratio of 14.07:1, well above the required limit. In the code, the background color is added with inline CSS, which the automated tool "can't see". |
| WAVE Evaluation Tool | Undefined | The text in the title attribute is the same as the visible text or alternate text. | The error was not detected during manual testing, as in this context it did not affect the screen readers and did not cause confusion for sighted people either. |
| ARC Toolkit | Labels or Instructions | A non-interactive element has a title attribute. | The error was not detected during manual testing, as in this context it did not affect the screen readers and did not cause confusion for sighted people either. |
| ARC Toolkit | Undefined | The text is formatted using italics in CSS. | The visual style of one line of text in the footer was not considered a mistake in this context, because italics were not used to emphasize anything. |
| Siteimprove Accessibility Checker | Target Size (WCAG level AAA) | Interactive elements should be at least 44 x 44 pixels in size but are smaller. | Manual testing was based on the European Union standard EN 301 549, which does not require compliance with WCAG AAA level rules. If the client requests, we can also test digital environments against WCAG AAA rules and recommended best practices. |
| Siteimprove Accessibility Checker | Info and Relationships | The table has a footer element, but it's empty. | This was not revealed during manual testing, as it does not affect the operation of the screen reader and does not disturb other users either. |

Table 2 shows that some of the findings reported by automated tools are not actually errors that would significantly affect the user experience or accessibility. This confirms that blindly trusting the results of automated tests can lead to inefficient use of resources, shifting attention to issues that are not actually an obstacle for users.

Therefore, it is important that the results of automated tests are complemented by expert review and manual testing so that resources are spent on solving real accessibility issues.
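A reviewer can double-check a contrast finding such as the one in Table 2 by calculating the ratio by hand. The sketch below follows the WCAG definitions of relative luminance and contrast ratio; the function names are our own, and the colors used are illustrative because the exact color values of the tested page are not given in this article.

```python
def channel_to_linear(value):
    """Convert one 8-bit sRGB channel (0-255) to its linear value."""
    c = value / 255
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb):
    """Relative luminance of an (R, G, B) color as defined by WCAG."""
    r, g, b = (channel_to_linear(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(foreground, background):
    """Contrast ratio between two colors, always >= 1."""
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)),
        reverse=True,
    )
    return (lighter + 0.05) / (darker + 0.05)


def passes_minimum_contrast(foreground, background, threshold=4.5):
    """Check the 4.5:1 limit used for normal-size text."""
    return contrast_ratio(foreground, background) >= threshold


WHITE = (255, 255, 255)
BLACK = (0, 0, 0)

print(round(contrast_ratio(WHITE, BLACK), 2))  # 21.0
print(contrast_ratio(WHITE, WHITE))            # 1.0
```

The formula only returns 1:1 when both colors have the same luminance, so a reported ratio of 1:1 for text that is clearly readable on screen is a strong hint that the tool evaluated a different background color than the one actually rendered.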

To summarise

The automated accessibility testing tools we used performed at a broadly similar level: each found between 3 and 7 of the 36 issues, or about 15% on average, and missed the majority of important issues on the website being tested.
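The average can be reproduced from the totals in Table 1. The short calculation below uses only those totals (entries marked "partially" are counted as found, as in the table); the variable names are our own.

```python
# Totals from the last row of Table 1.
manual_total = 36
tool_findings = {
    "WAVE": 3,
    "ARC Toolkit": 7,
    "AXE DevTools": 7,
    "Siteimprove": 4,
}

# Share of the manually found issues that each tool also reported.
detection_rates = {
    tool: found / manual_total for tool, found in tool_findings.items()
}
average_rate = sum(detection_rates.values()) / len(detection_rates)

for tool, rate in detection_rates.items():
    print(f"{tool}: {rate:.1%}")
print(f"Average: {average_rate:.1%}")
# WAVE: 8.3%
# ARC Toolkit: 19.4%
# AXE DevTools: 19.4%
# Siteimprove: 11.1%
# Average: 14.6%
```

In other words, even the best-performing tools in this test found fewer than one in five of the issues identified manually.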

In our opinion, one of the reasons is that when using a website or application, the user performs various actions such as pressing buttons, entering text or enlarging the page. These actions trigger various changes on the page, such as opening modals, accordions, or notifications.

However, automated tools do not have the capacity to simulate all these activities or analyze the changes that occur due to them. When user behaviour and context are not taken into account, many accessibility problems are overlooked.

There may be automated tools on the market that detect somewhat more accessibility errors than the tools covered in this article. Nevertheless, we believe that automated tools are still a long way from replacing manual testing, and this will not happen anytime soon.

If you want to find out how accessible your digital channels are, talk to our accessibility specialist – a 30-minute conversation will help you see the situation more clearly and understand where to start. You can book a free consultation quickly and conveniently online.