How a leading global sports technology company improved its user experience

An accessible web or mobile app can be used by as many people as possible, with or without special needs. Accessibility improvements benefit everyone: for example, higher-contrast text colours make content easier to read, and well-structured headings help users find information more quickly.

With this in mind, a leading global sports technology company turned to our specialists. In addition to creating immersive experiences for fans and bettors, the company safeguards sport by detecting and preventing fraud.

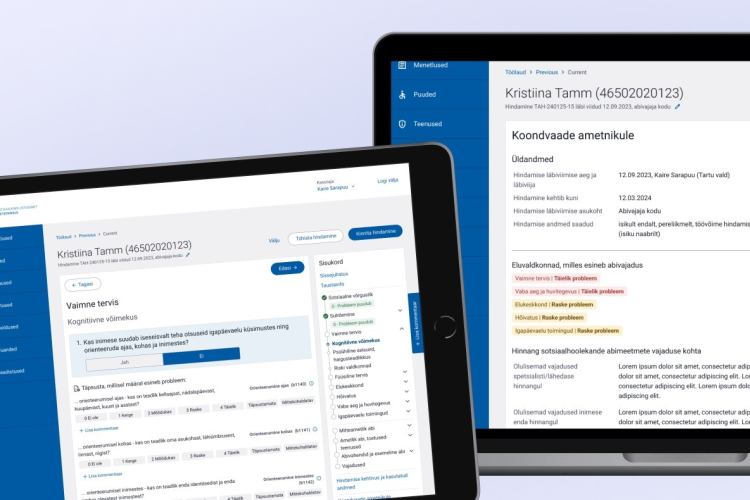

They asked us to evaluate the accessibility of their e-learning program, which focuses on athlete well-being. The program includes instructional videos on recognising warning signs and guidance on appropriate behaviour. After watching the videos, participants answer control questions and, if successful, receive a certificate.

The company also wanted us to review the accessibility of its mobile application for both Android and iOS. Through the app, users can submit reports of misconduct in sport, such as corruption, bribery, or gambling addiction.

At Trinidad Wiseman, we focus on analysing and improving the accessibility of digital environments to ensure they are usable for the widest possible range of users. Our services include accessibility audits, user testing, training, and consulting. Learn more about our accessibility services and get in touch with us.

How accessible is the environment?

To find out how accessible a website or mobile app is, it must be tested. Testing is done against the Web Content Accessibility Guidelines (WCAG) and/or the European standard EN 301 549, which incorporates the WCAG 2.1 AA requirements. For this project, we tested the sports technology company’s environments against EN 301 549 as well as the updated WCAG 2.2 AA requirements.

How is accessibility tested on websites and mobile apps?

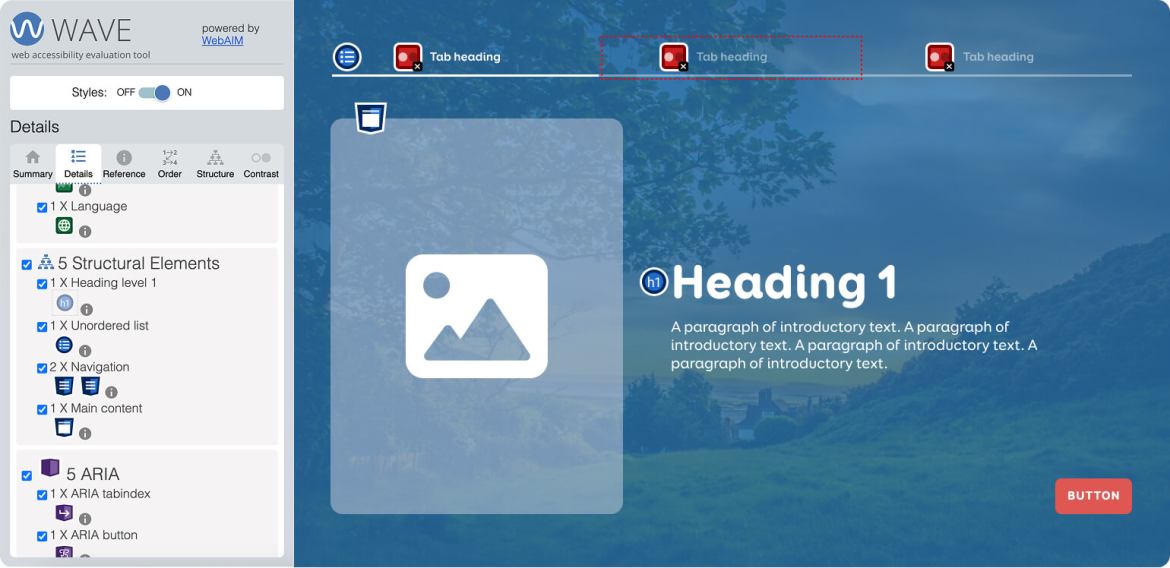

Part of accessibility testing involves automated tools. For example, the WAVE Evaluation Tool can identify issues such as insufficient text contrast.
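Contrast checkers of this kind apply the contrast-ratio formula defined in WCAG. As an illustration, and not a description of how WAVE is implemented, the Python sketch below computes that ratio for two `#RRGGBB` colours. It then applies the Success Criterion 1.4.3 (AA) thresholds of 4.5:1 for normal text and 3:1 for large text. The function names are our own.

```python
def _channel_to_linear(value: int) -> float:
    """Convert an 8-bit sRGB channel (0-255) to linear light, as in the WCAG definition."""
    c = value / 255
    # WCAG 2.2 uses 0.04045 here; older versions print 0.03928,
    # which gives identical results for 8-bit channel values.
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_colour: str) -> float:
    """Relative luminance (0 = black, 1 = white) of a colour written as '#RRGGBB'."""
    h = hex_colour.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return (0.2126 * _channel_to_linear(r)
            + 0.7152 * _channel_to_linear(g)
            + 0.0722 * _channel_to_linear(b))


def contrast_ratio(fg: str, bg: str) -> float:
    """WCAG contrast ratio (L_lighter + 0.05) / (L_darker + 0.05), ranging from 1 to 21."""
    l1, l2 = relative_luminance(fg), relative_luminance(bg)
    lighter, darker = max(l1, l2), min(l1, l2)
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa(fg: str, bg: str, large_text: bool = False) -> bool:
    """SC 1.4.3 (AA): at least 4.5:1 for normal text, 3:1 for large text."""
    return contrast_ratio(fg, bg) >= (3.0 if large_text else 4.5)


print(round(contrast_ratio("#767676", "#FFFFFF"), 2))
print(passes_aa("#777777", "#FFFFFF"))
```

For example, mid-grey `#767676` on white comes out at roughly 4.54:1 and passes for normal text. `#777777` comes out at roughly 4.48:1 and only meets the large-text threshold. This shows how a barely visible difference in colour can decide a pass or a fail.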

Automated tools can also check the page structure: whether lists, tables, and navigation elements are coded correctly, and whether heading levels follow a logical hierarchy without skipped levels.
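The heading part of that check is simple to sketch. The example below is a minimal illustration built on Python's standard `html.parser`, not a description of what WAVE does internally. It lists heading levels in document order and flags places where a level is skipped on the way down, for instance an `h2` followed directly by an `h4`. It only looks at `h1` to `h6` elements, so headings created with `role="heading"` and `aria-level` are not covered.

```python
from html.parser import HTMLParser


class HeadingCollector(HTMLParser):
    """Collects heading levels (h1 to h6) in the order they appear."""

    def __init__(self) -> None:
        super().__init__()
        self.levels: list[int] = []

    def handle_starttag(self, tag, attrs):
        # html.parser lower-cases tag names, so <H2> arrives as "h2".
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.levels.append(int(tag[1]))


def skipped_heading_levels(html: str) -> list[tuple[int, int]]:
    """Return (previous, current) pairs where a heading goes down more than one level.

    Moving back up (e.g. h4 -> h2) is allowed; only downward jumps are flagged.
    """
    parser = HeadingCollector()
    parser.feed(html)
    levels = parser.levels
    return [(prev, cur) for prev, cur in zip(levels, levels[1:]) if cur > prev + 1]


page = "<h1>Course</h1><h2>Module 1</h2><h4>Quiz</h4><h2>Module 2</h2>"
print(skipped_heading_levels(page))
```

Here the jump from `h2` to `h4` is reported, while the return from `h4` to `h2` is not, because closing a deeper section and starting a new one at a higher level is a normal part of a hierarchy.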

For the client’s e-learning program, we used WAVE to test text and user interface contrast, as well as page titles. We also used Adobe Acrobat Pro to test PDF documents, verifying whether a document title had been set, whether the document language was specified, and whether other accessibility features were in place.

However, automated tools alone are not enough, as they cannot detect every issue. Accessibility testing must also involve manual methods: using a keyboard, mouse, screen readers, and voice commands. Read more about the comparison between automated and manual testing in our previous blog post.

A screen reader is a program that reads aloud the content on the screen, enabling blind and visually impaired users to access the information. It announces whether text is a heading or a list item, and it describes images when they have descriptive alternative text.

For the e-learning program, we tested the desktop version with:

- NVDA screen reader (Windows) with Firefox.

- JAWS screen reader (Windows) with Chrome.

- VoiceOver (macOS, built-in) with Safari.

Since the program can also be completed on mobile, we tested it with:

- TalkBack (Android, built-in) with Chrome.

- VoiceOver (iOS, built-in) with Safari.

For the mobile app, we used TalkBack and VoiceOver, as well as voice commands (Voice Access on Android and Voice Control on iOS) to check whether users could perform actions such as pressing buttons (“Click login”) or entering text into fields by voice.

We also connected a physical keyboard via Bluetooth or cable to navigate with Tab and arrow keys and activate elements with Enter or the space bar. In addition, we evaluated the overall structure, colour contrast, and behaviour when system-level text size and contrast settings were changed.

The project work was divided into six stages

Sampling

First, we selected the pages and flows to be tested. Since these environments have specific user journeys, we decided to cover the entire flow of the e-learning program, from registration and login to completing the program and obtaining the certificate.

For the mobile apps, we focused on the main user flow of report submission, as well as some key views such as FAQs and app settings. Throughout the project, we kept the client informed by sending monthly summaries of completed work.

Testing the e-learning program

Once the sample was confirmed with the client, we began testing the e-learning environment. As the program teaches the principles of fair sport primarily through instructional videos, the videos were the main focus of our testing.

For videos, the main checks were whether subtitles were available for hearing-impaired users and whether audio descriptions had been added for visually impaired users. We also tested the different types of control questions, which included:

- multiple-choice questions using checkboxes and radio buttons,

- drag-and-drop exercises where terms had to be matched with their explanations.

In addition, we tested the graduation email and the certificate PDF. Together with the client, we decided to conduct the email testing in Gmail. As with the website testing, we used a keyboard and a screen reader to assess the email’s structure, for example whether headings clearly described the content that followed.

For the certificate, we used Adobe Acrobat Pro to check whether the document scaled properly across different screen sizes, and we also tested it with screen readers and keyboard navigation.

Testing the mobile app

After completing the e-learning program testing, we moved on to the Android and iOS applications. The client gave us access to test versions of the apps, which allowed us to create sample misconduct reports and test the apps’ accessibility.

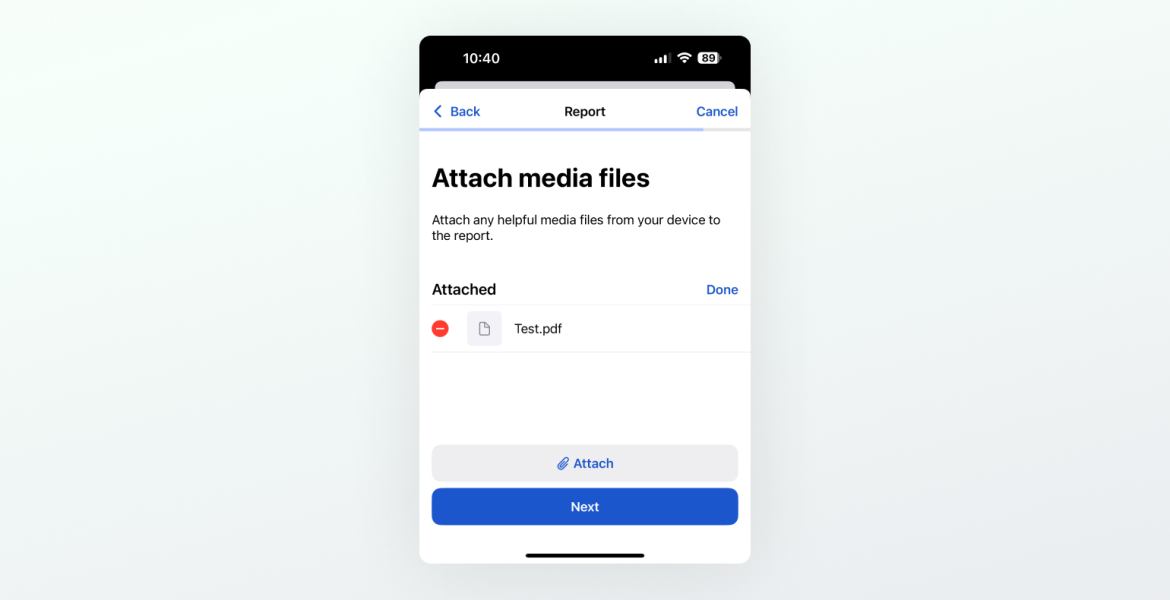

As the main purpose of the app was to submit reports of dishonesty in sport, we tested every step of that process. This included multiple-choice questions, plain text form fields, and the option to upload files.

Preparation of reports

We created comprehensive reports for both the e-learning environment and the mobile applications. Specifically, we tested:

- 92 requirements for the e-learning program,

- 44 requirements for the certificate, and

- 105 requirements for the mobile app on both platforms.

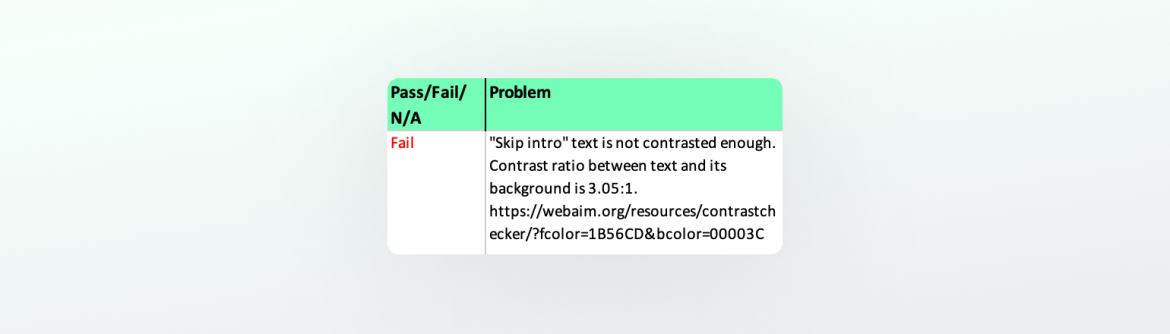

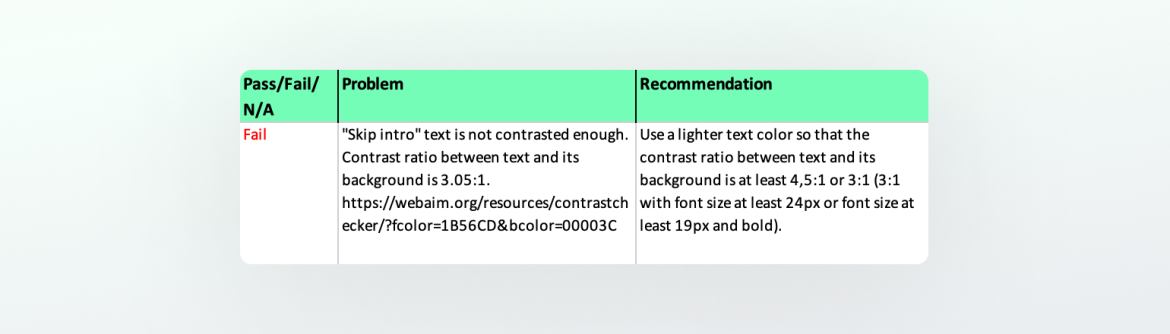

For each WCAG 2.2 AA or EN 301 549 requirement, we indicated whether the environment complied or whether deficiencies existed. To make results clearer, we used colour coding:

- Green – Compliant (Pass).

- Red – Non-compliant (Fail).

- Black – Not applicable (N/A), for example if no videos were present on a given page.
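A per-requirement results table like this maps naturally onto a small data structure. The sketch below is hypothetical. The `Result` enum mirrors the three colour codes, and `compliance_rate` is an illustrative metric of our own, not something the reports are stated to contain. It leaves N/A requirements out of the calculation, so that a page without videos is neither penalised nor credited for video requirements.

```python
from collections import Counter
from enum import Enum


class Result(Enum):
    """Outcome recorded for one requirement; values mirror the report's colour coding."""
    PASS = "green"
    FAIL = "red"
    NOT_APPLICABLE = "black"


def summarise(results: dict[str, Result]) -> dict[str, int]:
    """Count how many requirements received each outcome."""
    counts = Counter(results.values())
    return {outcome.name: counts[outcome] for outcome in Result}


def compliance_rate(results: dict[str, Result]) -> float:
    """Share of applicable requirements that pass; N/A entries are excluded."""
    counts = Counter(results.values())
    applicable = counts[Result.PASS] + counts[Result.FAIL]
    # Nothing applicable: treat as fully compliant (a design choice, not a WCAG rule).
    return counts[Result.PASS] / applicable if applicable else 1.0


# Hypothetical results for one page, keyed by WCAG success criterion.
page = {
    "1.4.3 Contrast (Minimum)": Result.PASS,
    "2.4.7 Focus Visible": Result.FAIL,
    "1.2.2 Captions (Prerecorded)": Result.NOT_APPLICABLE,  # no video on this page
}
print(summarise(page))        # {'PASS': 1, 'FAIL': 1, 'NOT_APPLICABLE': 1}
print(compliance_rate(page))  # 0.5
```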

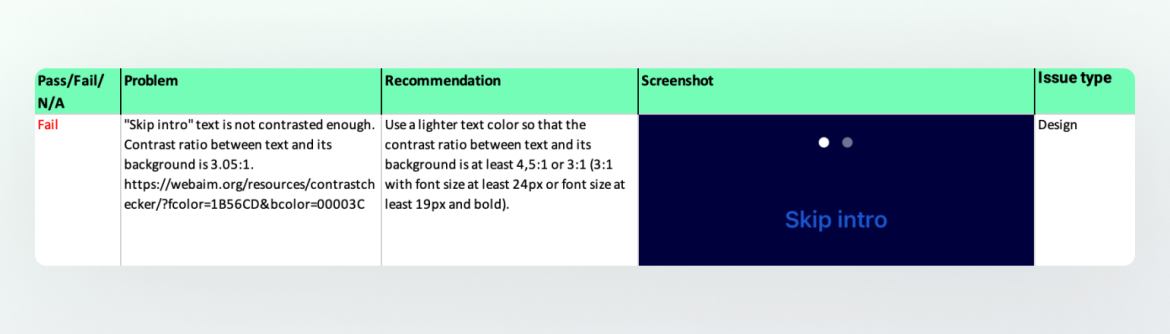

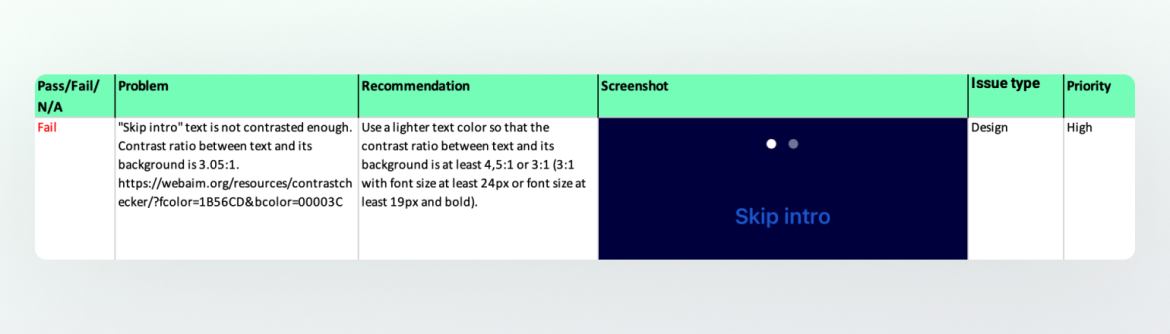

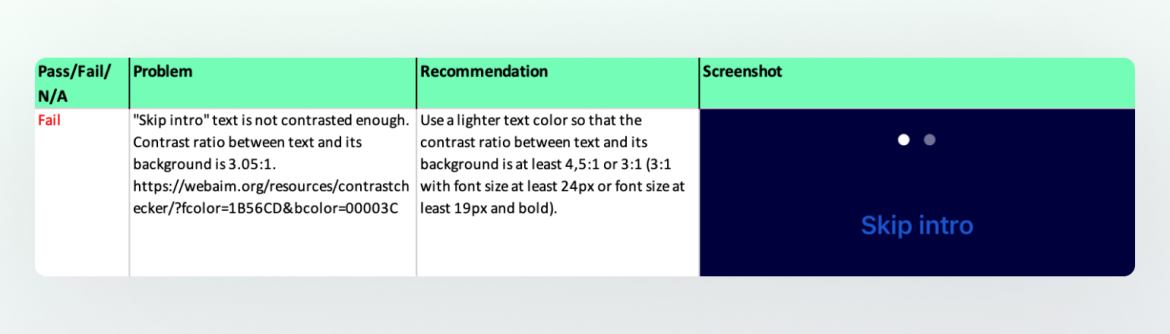

For each deficiency, we added five details:

- A clear description of the issue, explaining why the requirement was not met.

- An initial suggestion for how to fix the issue, to guide developers, designers, or content creators.

- Screenshots showing the problem area, where relevant.

- An indication of whether the issue was technical, design-related, or content-related, helping the client direct fixes to the right team.

- A priority level, based on how much the issue hindered or blocked use of the e-learning program or app.
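The five details recorded for each deficiency fit naturally into a simple record. In this hypothetical Python sketch, the `Area` values follow the technical, design and content split described above. The four-step `Priority` scale and the `backlog_by_team` helper are our own assumptions. They illustrate how such a record lets each team pick up its own fixes, most urgent first.

```python
from dataclasses import dataclass, field
from enum import Enum, IntEnum


class Area(Enum):
    """Which team the fix belongs to."""
    TECHNICAL = "technical"
    DESIGN = "design"
    CONTENT = "content"


class Priority(IntEnum):
    """Hypothetical scale: a lower number means the issue blocks use more severely."""
    BLOCKER = 1
    HIGH = 2
    MEDIUM = 3
    LOW = 4


@dataclass
class Deficiency:
    requirement: str      # the WCAG / EN 301 549 requirement that was not met
    description: str      # why the requirement was not met
    suggestion: str       # initial suggestion for a fix
    area: Area            # technical, design-related or content-related
    priority: Priority    # how much the issue hinders or blocks use
    screenshots: list[str] = field(default_factory=list)  # file names, where relevant


def backlog_by_team(items: list[Deficiency]) -> dict[Area, list[Deficiency]]:
    """Group deficiencies by area, most urgent first within each group."""
    grouped: dict[Area, list[Deficiency]] = {area: [] for area in Area}
    for item in sorted(items, key=lambda d: d.priority):
        grouped[item.area].append(item)
    return grouped


# Illustrative entries only; areas and priorities are assumed, not audit findings.
issues = [
    Deficiency("1.3.1 Info and Relationships",
               "Text styled as a heading is not marked up as a heading.",
               "Use a heading element with the correct level.",
               Area.TECHNICAL, Priority.MEDIUM),
    Deficiency("2.4.7 Focus Visible",
               "Buttons show no visible focus indicator.",
               "Add a clearly visible focus style.",
               Area.DESIGN, Priority.HIGH, ["focus-login-button.png"]),
    Deficiency("2.1.1 Keyboard",
               "A control cannot be operated with the keyboard.",
               "Make the control keyboard-operable.",
               Area.TECHNICAL, Priority.BLOCKER),
]
for area, items in backlog_by_team(issues).items():
    print(area.value, [d.requirement for d in items])
```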

Presenting the results to the client

Once testing was complete and reports prepared, we created a presentation to share the findings with the client and highlight the most common issues.

For example, we found that some text visually resembled headings but was not marked up as heading elements in the code. We also identified accessibility gaps that significantly hindered usability, such as UI elements without visible focus styles, which made it unclear which element currently had focus when navigating with the keyboard.

To highlight such issues, we reviewed the reports again and identified the main bottlenecks. During the presentation, we discussed the results with the client, and the developers of the e-learning program and mobile apps were able to ask detailed questions. Afterward, we provided the client with full written reports to support the correction process.

Planned follow-up activities

Once the company has corrected the identified issues, we will re-test both the e-learning program and the mobile apps to confirm that the deficiencies have been resolved and that no new problems have been introduced. As with the first round of testing, we will prepare a detailed report and present a final summary to the client.

Risk mitigation methodology

The project plan and scope were carefully designed to ensure a realistic schedule and pace for testing. Nevertheless, it is important to set clear milestones for the team doing the work. Without them, tasks risk being postponed until just before the deadline, creating unnecessary stress and jeopardising delivery.

In this project, we mitigated this risk with monthly progress summaries, which allowed both us and the client to track whether the work was proceeding as planned and within the agreed timeframe. We also shared the summaries with the client's team to maintain transparency.

Conclusion

In collaboration with the sports technology company and Trinidad Wiseman's development team, we improved the accessibility of their e-learning environment and mobile apps so that as many people as possible can use them, thereby helping to make sport fairer.

Because the client’s team addressed issues continuously alongside our testing, people with special needs can now also learn the principles of fair sport through the e-learning program, recognise warning signs, and report cases of dishonesty in sport via the mobile application.

As an added benefit, several developers and designers both in Estonia and internationally are now more aware of accessibility and how to design and develop accessible websites and applications.