Neuro UX: Smoke and Mirrors or the Future of User Experience Research?
Aga Bojko, Associate Director, User Centric Inc.

Make sure to follow this blog to get quick updates on what's going on at the event. I will be live-blogging from the event, so if you spot a typo or two, please be gentle with me.

Aga has been focusing on integrating eye movement analysis into traditional UX research to obtain actionable insights that improve products and support valid, data-based business decisions. She has more than ten years of experience conducting studies with various types of eye trackers.

Aga is known worldwide as one of the leading eye tracking specialists. When it was suggested that she talk about the future of UX, she felt that the topic was too vague and too broad. Instead, she decided to talk about emerging UX research methods.

Today she will share her thoughts on neuro UX.

Starting off, she pointed out that we tend to do a lot of things under the umbrella of user experience.

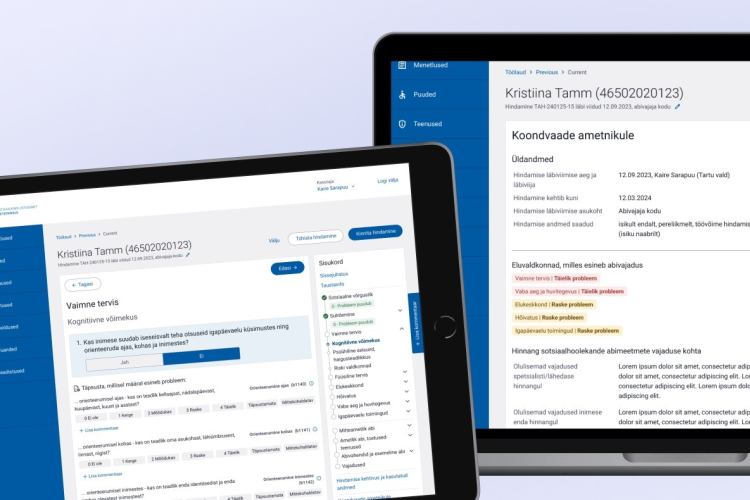

She noted that old-school research methods are not going anywhere, but UX as a research field is maturing. This means that there are now many products and options for conducting usability research and experiments in new and improved ways.

So how do we measure user experience?

Experiences must exceed expectations, but how do we measure this? Definitely not with a questionnaire. These days researchers are digging deeper and starting to use sophisticated instruments to go beyond the obvious and understand the underlying biological factors.

Recognizing facial expressions

Facial muscles indicate emotions, and this research goes back to the work of Darwin and Ekman.

Facial electromyography (fEMG)

Electrodes are attached to the facial muscles. Researchers have discovered that when tasks take longer, there is a lot more activation in certain facial muscles.

This type of testing is a little too much for the average subject: it needs complex equipment, and the whole process is unpleasant and time-consuming. Video-based solutions are considered much less intrusive, and all you need for them is a simple webcam and professional software.

The EmoVision dashboard is one option for analyzing facial expressions. People can be sad or puzzled, neutral or happy, even surprised; this kind of software recognizes the subject's emotions, lets us analyze them further, and generates a graph of them.

The FaceReader dashboard is useful for measuring overall emotional intensity on a scale of 0 to 1, but often several emotions are going on at the same time and the software can only detect the strongest of them. The problem with these kinds of applications is that they are not mature yet.
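To make the "strongest emotion only" limitation concrete, here is a minimal Python sketch. It assumes a hypothetical per-frame dictionary of emotion scores on a 0 to 1 scale; the emotion names, the numbers and the `dominant_emotion` helper are made up for illustration and are not FaceReader's actual output format or API.

```python
# Hypothetical per-frame emotion scores on a 0-1 scale
# (illustrative only, NOT FaceReader's real export format).
frame_scores = {
    "happy": 0.42,
    "surprised": 0.38,
    "puzzled": 0.35,
    "neutral": 0.10,
}


def dominant_emotion(scores: dict[str, float]) -> tuple[str, float]:
    """Hypothetical helper: keep only the strongest emotion, discard the rest."""
    label = max(scores, key=lambda name: scores[name])
    return label, scores[label]


label, intensity = dominant_emotion(frame_scores)
print(label, intensity)  # happy 0.42
```

In this made-up frame, "surprised" and "puzzled" are almost as strong as "happy", yet a strongest-only report throws them away, which is exactly the problem with mixed emotions.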

This whole section makes me wonder whether it is suitable for us Northern people, as we tend to be stone-cold when it comes to showing emotions.

During measurements, subjects need to stay completely still: they must not talk or move their heads. Touching the face is generally not allowed, and the method does not work well with glasses. It also requires more lighting and recognizes only the simplest and strongest emotions; subtle emotions are missed.

Measuring brain activity

We already know a great deal about how the brain functions and how certain parts of it are linked to emotion and vision. But which areas react to design?

One of the methods for measuring this kind of activity is functional MRI (fMRI). With it, we can see how a person responds to something, but it is very energy-consuming and loud, and you can't really move the machine even if you want to.

The challenge, however, lies in the fact that the brain is always on!

An important milestone is the Coke vs. Pepsi fMRI study published in Neuron in 2004.

During the study, participants were put in the MRI machine. One group got Coke, while the other got Pepsi. Sometimes they were shown which brand they were drinking, and sometimes only a faint light, which made it hard to recognize exactly what they were tasting.

This allowed the researchers to strip away participants' established opinions about the drinks. So how did the brain react? The study found a direct link between the brand and the overall taste experience. Even more surprisingly, Pepsi did not trigger a brand reaction at all. This experiment led to a new testing formula: brand-cued brain activity minus light-cued brain activity = the effect of the brand.
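The subtraction logic behind that formula can be sketched in a few lines of Python. The activation values below are invented for illustration (they are not data from the Neuron study), the `brand_effect` helper is hypothetical, and a real fMRI analysis would fit a statistical model per voxel rather than subtract two simple averages.

```python
from statistics import mean

# Invented activation values (arbitrary units) for one brain region,
# one value per trial. NOT data from the 2004 Neuron study.
brand_cued = [1.8, 2.1, 1.9, 2.2]  # brand shown before tasting
light_cued = [1.2, 1.4, 1.1, 1.3]  # only a light cue before tasting


def brand_effect(cued: list[float], uncued: list[float]) -> float:
    """Hypothetical helper: cued activity minus light-cued activity."""
    return mean(cued) - mean(uncued)


print(round(brand_effect(brand_cued, light_cued), 2))  # 0.75
```

Whatever activity both conditions share (tasting, swallowing, the brain simply being "always on") cancels out in the difference; an effect close to zero would match the observation that Pepsi did not trigger a brand reaction.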

EEG (electroencephalography)

EEG records the brain's electrical activity. On its own it doesn't show valence (whether an emotion is positive or negative), but inferring it seems to be possible: researchers have found more activation on the right side of the brain during negative emotions and more activation on the left side during positive reactions.
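A simple way to summarize a left/right difference like this is a normalized asymmetry index. The sketch below assumes you already have one activation value per hemisphere; the numbers and the `asymmetry_index` and `likely_valence` helpers are hypothetical. Be aware that many EEG studies work with alpha-band power, which is inversely related to activation, so the sign convention has to be checked against the method actually used.

```python
def asymmetry_index(left: float, right: float) -> float:
    """(L - R) / (L + R): positive means relatively more left-side activation."""
    if left + right == 0:
        raise ValueError("total activation must be non-zero")
    return (left - right) / (left + right)


def likely_valence(left: float, right: float) -> str:
    """Map the index to valence using the left=positive, right=negative finding."""
    index = asymmetry_index(left, right)
    if index > 0:
        return "positive"
    if index < 0:
        return "negative"
    return "undetermined"


# Hypothetical activation values in arbitrary units.
print(likely_valence(left=6.0, right=4.0))  # positive
print(likely_valence(left=3.0, right=5.0))  # negative
```

Normalizing by the total keeps the index between -1 and 1, so a participant with generally stronger signals does not automatically look more "emotional" than one with weaker signals.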

This method offers better temporal resolution than fMRI and gives real-time results with millisecond precision. This measurement uses conductive gel.

Emotiv headset: an EEG system using dry electroencephalography (dry EEG)

No gel is used, and it is really not that complicated. The problem, however, is poor spatial resolution.

After introducing these various testing methods and devices, Aga came to the conclusion that we must bridge the gap between cognitive neuroscience and neuro UX.

Eye tracking for user experience

Some time ago, eye trackers were not easy to use; only specialists who knew them well could operate them. Recently things have changed. The devices have become very easy to use, and as a consequence there is a lot of misuse. Even a child can put together a basic heat map.

So why do the eyes move, and why should we care?

Eyes move to bring things into focus. The assumption that looking at something means you are paying attention to it is known as the eye-mind hypothesis.

Portable eye trackers built into glasses are now used to get realistic, credible results on the fly. Heat maps in general are not a good basis for data analysis, but gaze points can be used for reliable qualitative analysis.
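One reason heat maps say less than raw gaze points: they aggregate. The sketch below is a simplified, hypothetical version that bins (x, y) gaze points into a coarse grid and counts them (real tools typically use fixations and smooth the result); the coordinates, the 100-pixel cell size and the `heat_map` helper are assumptions for illustration.

```python
from collections import Counter

# Hypothetical gaze points in screen pixels, in the order they were recorded.
gaze_points = [(120, 80), (130, 85), (610, 400), (125, 90), (615, 410)]


def heat_map(points: list[tuple[int, int]], cell: int = 100) -> Counter:
    """Count gaze points per grid cell of `cell` x `cell` pixels."""
    return Counter((x // cell, y // cell) for x, y in points)


counts = heat_map(gaze_points)
print(counts)
```

The counts show that cell (1, 0) received three gaze points and cell (6, 4) two, but not that the user jumped away and then came back to the first area. That sequence is what the raw gaze points preserve for qualitative analysis.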

The general problem with eye tracking is that seeing something does not mean the user knows what to do with it. Because of this, the person conducting the test must keep instructions loud and clear.

Pupil size is linked to emotional arousal (it's not clear why).

Problems:

- Average fixation length: processing problems can occur with test subjects who wear mascara and heavy make-up, because the tracker can confuse it with the pupil. Many labs offer make-up removal.
- Droopy eyelids can cover the pupil.
- Glasses cause extra reflections and shadows, and air bubbles can cause multiple errors.
- Dynamic content often leads to long analysis.
- Mobile devices can be hard to track.

We are seeing a trend towards combining EEG and eye tracking.

So what does this all mean for user experience designers?

Aga believes that we need to be careful with the new methods while staying inquisitive and unafraid to use them. She closed with the message that we should all cautiously embrace neuro UX and rely on scientific data that is solid and proven.