Interviews with WUD 2020 speakers: Hadar Maximov’s recommendations for designing emotional robots

Hadar Maximov is a senior UX designer who specialises in emotional design and human-machine interaction. Today, Hadar is a lead UX designer at Amdocs XDC, a global corporation specialising in the telco and media industry.

She was one of the speakers at the 2020 WUD conference and talked about why it is important to design an emotional connection between humans and robots. In this interview, we wanted to know more about that intriguing topic.

For those who missed your talk, could you please briefly recap why machines and humans should have an emotional connection?

Well, emotional connection is an additional layer to the user experience. Taking the user from point A to point B will cover the basics but adding emotions to that journey will create a better bond between the machine and its owner. Eventually, these extra layers in the product experience will make customers buy more from the same company.

What are some examples of robots creating a really good emotional connection?

A great example that comes to mind is the care robot for the elderly called ElliQ, which is made by a company named Intuition Robotics.

This robot, which is also a personal voice assistant, works proactively to make the lives of elderly people less lonely by giving them everything they need to stay sharp, connected and engaged.

For example, ElliQ can send health reminders, respond to questions, play cognitive games, stream music and connect with friends or family.

While these features have been around for a while in different devices and applications, most of them require that users first learn how to use them, which easily creates barriers and widens the digital divide.

"The exceptional thing about ElliQ is the way it interacts with humans. It is natural, uses body language, gestures – things we understand intuitively."

ElliQ care robot for the elderly (photo: Intuition Robotics)

How clearly, if at all, should users understand that they are interacting with technology? Should we treat robots like machines, humans or pets?

At its 2018 I/O conference, Google presented an update for its voice assistant: a new feature called Google Duplex that can make phone calls for you and talk to the person on the other end to schedule appointments and reservations.

It was mind-blowing and scary at the same time. The voice assistant sounded so real that you couldn’t tell that it was actually a robot’s voice.

In general, we do like to know whether we are having a conversation with a robot or a human, because we are not used to interacting with robots on a daily basis.

But as this technology is integrated more and more into our lives and we start to feel its benefits, we will also start leaning more towards frictionless communication. It just takes some time to get used to robots in this context.

What are the boundaries of using emotions in human-robot interactions?

Every company should decide on their boundaries based on the culture and customers they are targeting, but we should never intentionally create robots that will be treated disrespectfully.

"I’m not expecting machines to become our friends and robots should still be robots, which means that the target audience, the scenario and the environment should all be taken into serious consideration when setting the boundaries."

What are the main guidelines to follow when designing an emotional robot? Based on your experience, what are the most common pitfalls?

You should start by understanding your target audience and building the right character based on their needs and expectations. For millennials, the robots could be friendly, funny and have unique human-like characteristics.

On the other hand, elderly people generally don’t want robots to be like humans since they are not used to them. They expect to have a respectful and more distanced relationship with the machines.

Not all products have to be engaging and motivational all the time, so make sure you don't force emotions. Instead, be sure to keep empathy and lightness.

"Also think about all the imperfect moments, because these make the experience whole. For example, badly designed error states in chatbots or robots can devalue any previous experience quickly and reveal the true face of the robot.

By designing edge case scenarios, you will avoid awkwardness and create special moments."

The size of the device also affects how humans perceive technology. Bigger robots might feel overwhelming or even a bit scary, so you might consider giving them a warmer tone of voice and making sure that their gestures match their size.

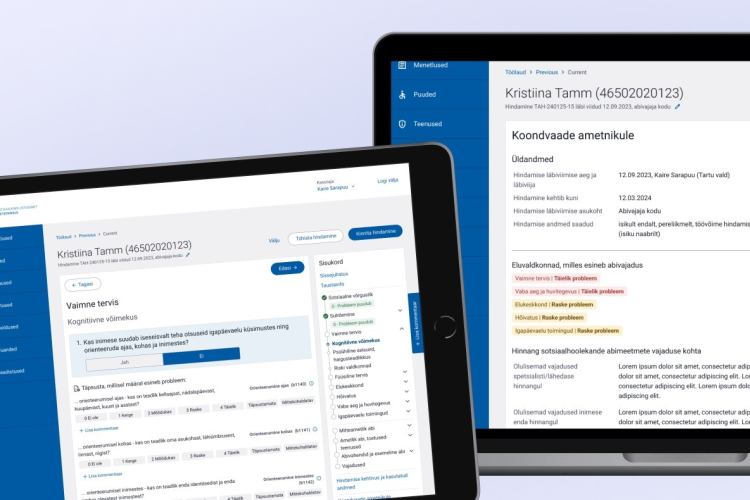

An increasing number of businesses are implementing chatbots to handle customer queries. How can these bots be made more empathetic and engaging?

Understanding the scenario will help bring more empathy and emotions to the conversations. For example, imagine you have a problem when using some service, you're frustrated or maybe even mad at someone and now there is a chatbot representing the company.

If the chatbot is designed well in this scenario, it can help you calm down and comfort you by letting you know that it will do anything to help you.

It just needs to show empathy and compassion for the other side by using phrases like "I understand your situation" or "Don't worry! We will fix this together".

Use motivational and engaging words, treat the customer with respect but don’t be too flattering. Also, have the chatbot provide time estimates for how long dealing with the problem will take and make sure it asks the user the right questions.

A conversation between the Lemonade insurance chatbot and a customer (screenshot: lemonade.com)

What are some interesting discoveries you’ve found when designing human-robot interactions?

Presenting problems and difficulties that a device is experiencing brings out a lot of empathy in us as humans and we need to use that if we want to create a better bond between robots and humans. But this only works if machines also know what to do after an error occurs.

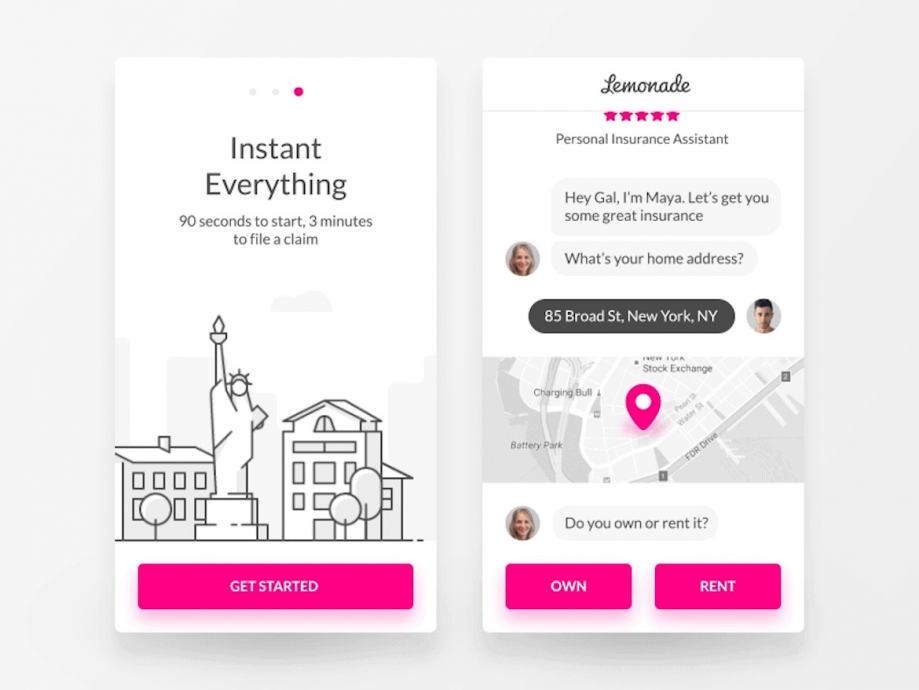

What’s the next big thing in how robots will help us run successful businesses?

Companies that cooperate with one another to provide a more holistic service experience to their customers will be the successful ones.

For example, if I have a medical device at home, it should be able to connect with my robot-operated systems, my digital doctor and maybe even with the health insurance agent.

That way, information about my health condition is provided to all relevant companies without my having to share the same information with each of them individually.

Whoever designs a robot that can do multiple things will also be successful. Wouldn't it be cool if we had an entity that could control or sync with all our devices and machines as well as assist the user or their whole family? Such an entity would help us conduct our everyday “orchestra”.

Ford and Agility Robotics develop a robot for people in the middle (image: Ford)

We hope that the interview with Hadar Maximov provided you with an interesting read and that you learned something new about human-robot interaction. We will also be posting our next interview with another WUD 2020 speaker for you to read soon.

You can find our previous interview with WUD 2020 speaker Jane Ruffino about the fascinating world of UX writing here.